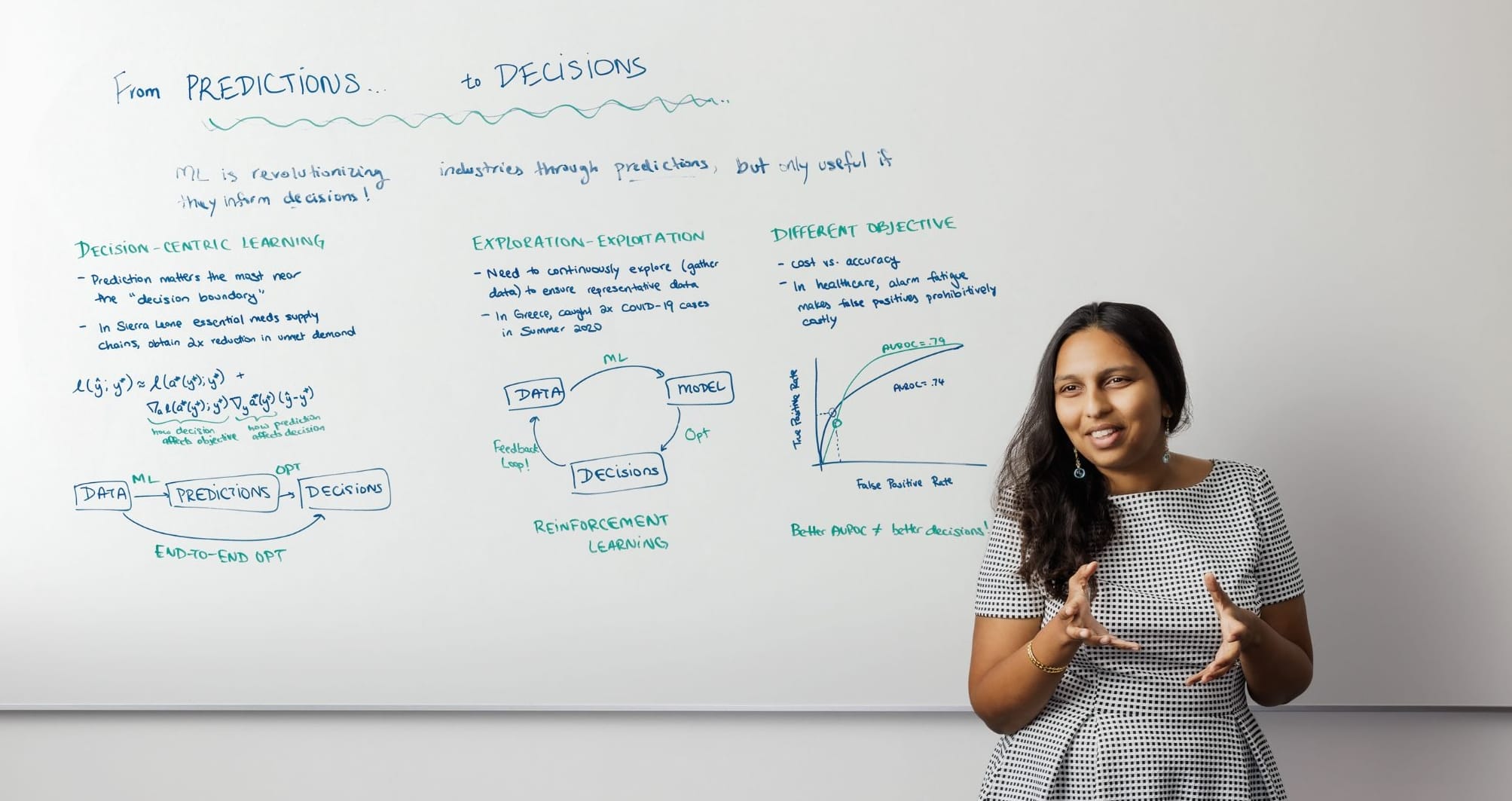

Artificial intelligence can be essential in solving a vast array of business problems, but in most cases, a simple “plug-and-play” approach to AI is inefficient or even counterproductive. Operations, information, and decisions assistant professor Hamsa Bastani explores solutions for making the most of AI in both her undergraduate Introduction to Management Science course and lectures for Executive Education. “A lot of businesses haven’t seen value coming out of machine-learning predictions,” she says. “A key reason is because not all predictions are useful — only the ones that inform specific decisions.”

Through studies with health-care practitioners, Bastani has identified three challenges that businesses in any sector may confront. For the National Medical Supplies Agency in Sierra Leone, which sought to optimize its medical-supply chain, the goal was to forecast demand and allocate limited inventory so that health facilities had the medicines they needed. What was required, Bastani learned, was a “decision-centric” end-to-end model that placed additional weight on facilities that had historically been understocked. Aligning the machine-learning model with that objective cut unmet demand for essential medicines in half.

A separate study in Greece aimed to identify international travelers entering the country with COVID-19 in the summer of 2020. Data was gathered, predictive models were developed, and decisions were based on those models. But those decisions also shape which new data gets collected, so unless the data is continuously updated, the information feeding the models quickly becomes stale. Bastani uses a relatable example: “If Netflix doesn’t recommend a particular movie, you might never watch it, and Netflix might never know that you liked it.” This self-censoring feedback loop can limit AI’s effectiveness. When Greece disrupted that loop by updating and expanding its data analysis, it caught twice as many positive COVID-19 cases.

Relying on the wrong AI metric can also do more harm than good, depending on your objectives. Models used by Penn Medicine and other health-care systems to flag at-risk patients can trigger false alarms, which take up providers’ valuable time and erode their trust in AI tools. Researchers learned that AUROC (area under the receiver operating characteristic curve), a metric that usually signals strong model performance, could still favor models that generated those faulty alerts. AUROC summarizes how well a model ranks patients across every possible alert threshold, not how many false alarms it raises at the threshold a hospital actually uses. In the end, it was better to use a model that had a lower AUROC but generated fewer false alarms.

Bastani encourages managers deploying AI to ensure that everyone along their AI operations chain communicates regularly to confirm that the system is performing as intended. “There’s this notion that as long as we build a good predictive model, you’re done,” she says. “But you need to do it in a way that’s aware of the downstream decisions that this prediction is going to impact.”

Published as “At the Whiteboard With Hamsa Bastani” in the Fall/Winter 2022 issue of Wharton Magazine.